Emobject

Emotions extend beyond personal feelings, offering a ubiquitous instrument for creating rich interaction opportunities. Emobject is an interactive interface that reflects surrounding emotions and controls external devices in response to emotional input. As an exploration of sensory substitution, we blur the boundary between the physical and the digital through an emotion recognition and feedback control system. In this paper, we discuss face perception, our design and implementation, and possible applications such as a social mood monitor, a home automation device, and a development platform for creative expression.

Introduction

This project started as an exploration into sensory substitution, emotion hacking, and the possibility of integrating interactive interfaces with human emotions. Could everyday objects convey information through emotions? Could we receive information about the state of an object or an environment through emotions, and in this way better internalize and relate to that information? What if we could seamlessly map digital information onto emotions, via a physical, tangible device that conveys a range of emotions through facial expressions, and then monitor the dynamics of that information through the dynamics of the corresponding emotions? Moreover, could we also do the opposite, controlling external devices or the state of different environments through changes in emotions or facial expressions? We developed a device that makes these scenarios possible, and we called it Emobject.

Emobject is a novel, interactive, tangible interface that bridges the gap between the worlds of bits, atoms, and emotions. It is a physical manifestation of a digital emoji with rich input, output, and control capabilities. It allows physical and digital information to be mapped onto emotional expressions, and it allows external devices to be controlled by changing the device's facial expression. Moreover, Emobject can detect the faces and emotions of people by leveraging the latest emotion recognition algorithms developed at the MIT Media Lab, and then adapt accordingly, mirror the emotions in real time, or control another device in response to the emotional input. Emobject is a universal emotion-based I/O device enabling a host of novel user experiences.

Background

Face perception is a rapid, subconscious process wired into our brains even before birth. We are in fact so good at recognizing faces and expressions that we often perceive them even in the most mundane objects, such as house facades, power outlets, landscapes, and even grilled toast. This phenomenon is called facial pareidolia, and it is an important survival mechanism. Moreover, we are much faster at perceiving faces or face-like objects (165 ms after stimulus onset) than at perceiving other objects, and we can perceive faces even in our peripheral vision. Could we hack this neurological phenomenon and exploit the highly efficient facial processor in our brain for tasks for which it was never intended? Could we perceive other critical information as rapidly and as subconsciously as we perceive faces?

Prior research into sensory substitution has shown that when a new kind of information stream is mapped onto a haptic stimulus through a vibrotactile device, users wearing the device develop a subconscious awareness of that information stream after some time. One example is the VEST, which enables deaf people to subconsciously perceive speech mapped onto patterns of vibrotactile stimulation on their back. Another example is a vibrotactile belt that enables users to develop a subconscious awareness of the direction of North. But could a similar subconscious awareness of an information stream be achieved without requiring users to wear any device at all, by using a visual stimulus in the form of facial expressions rather than a haptic stimulus?

Implementation

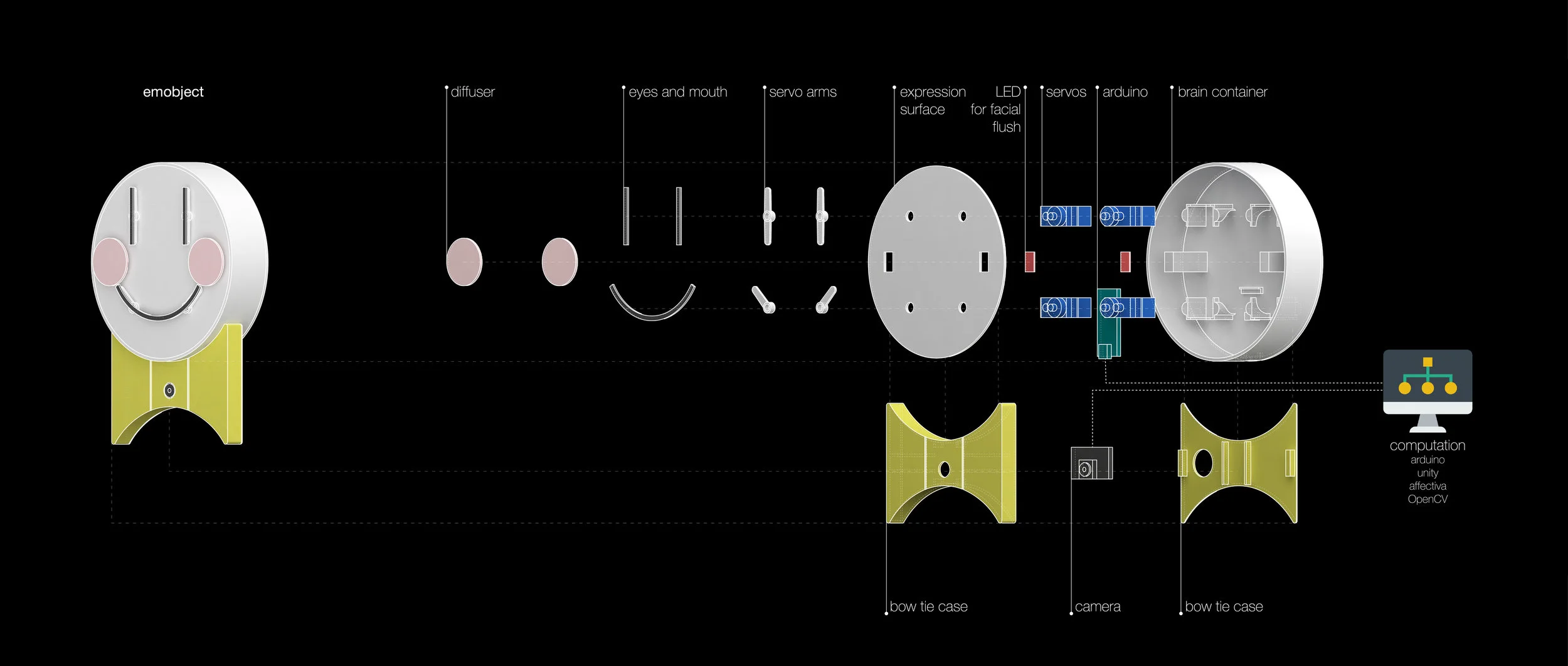

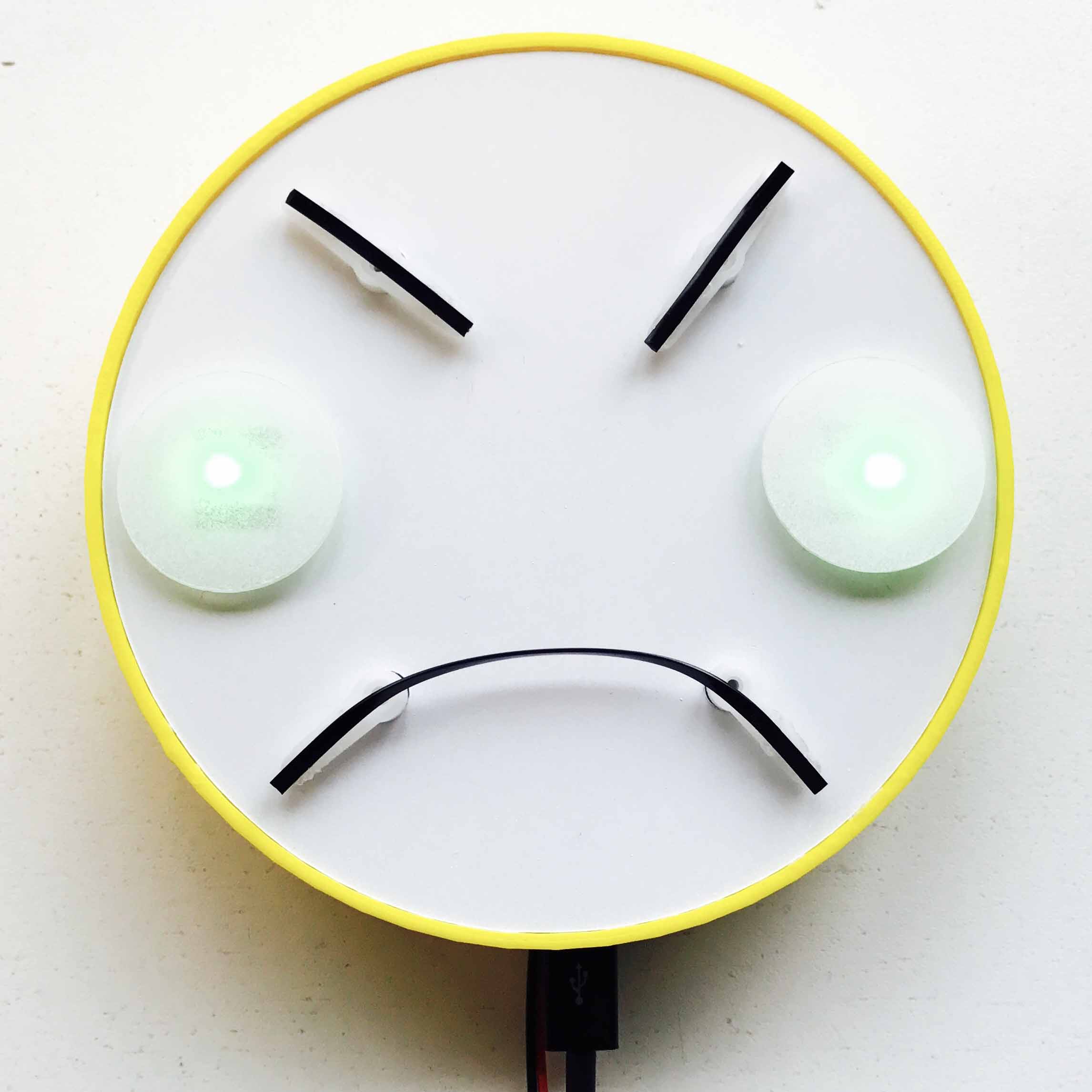

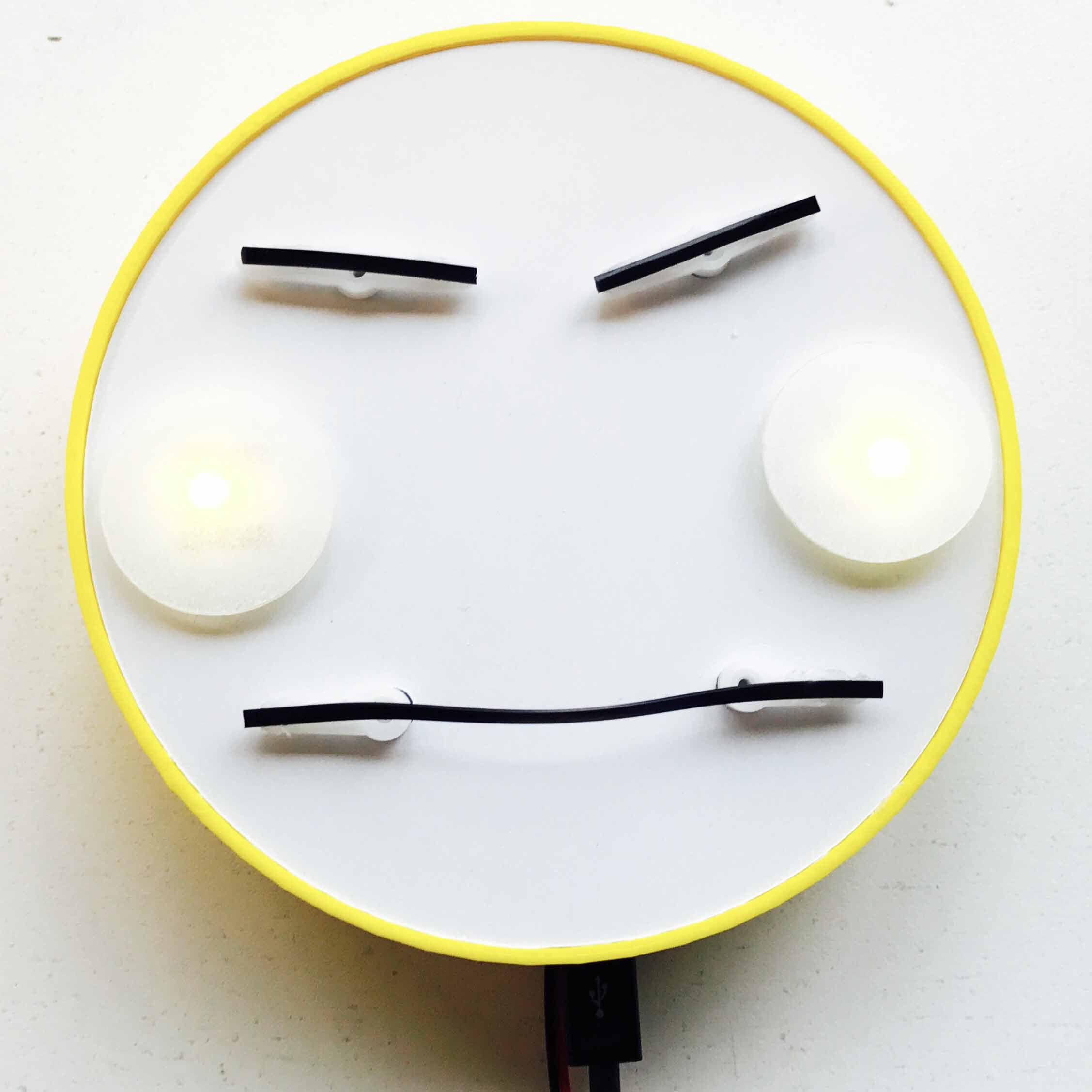

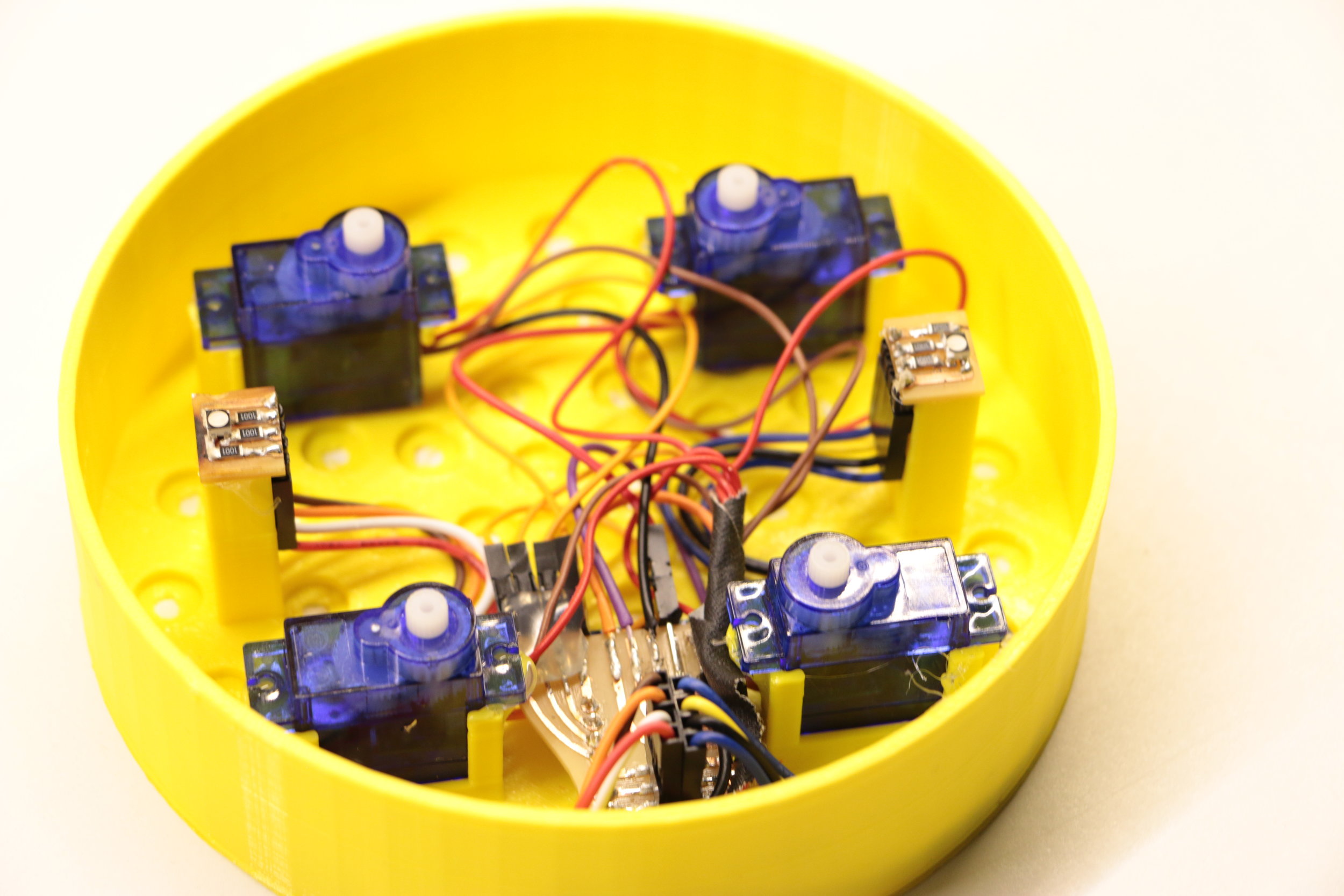

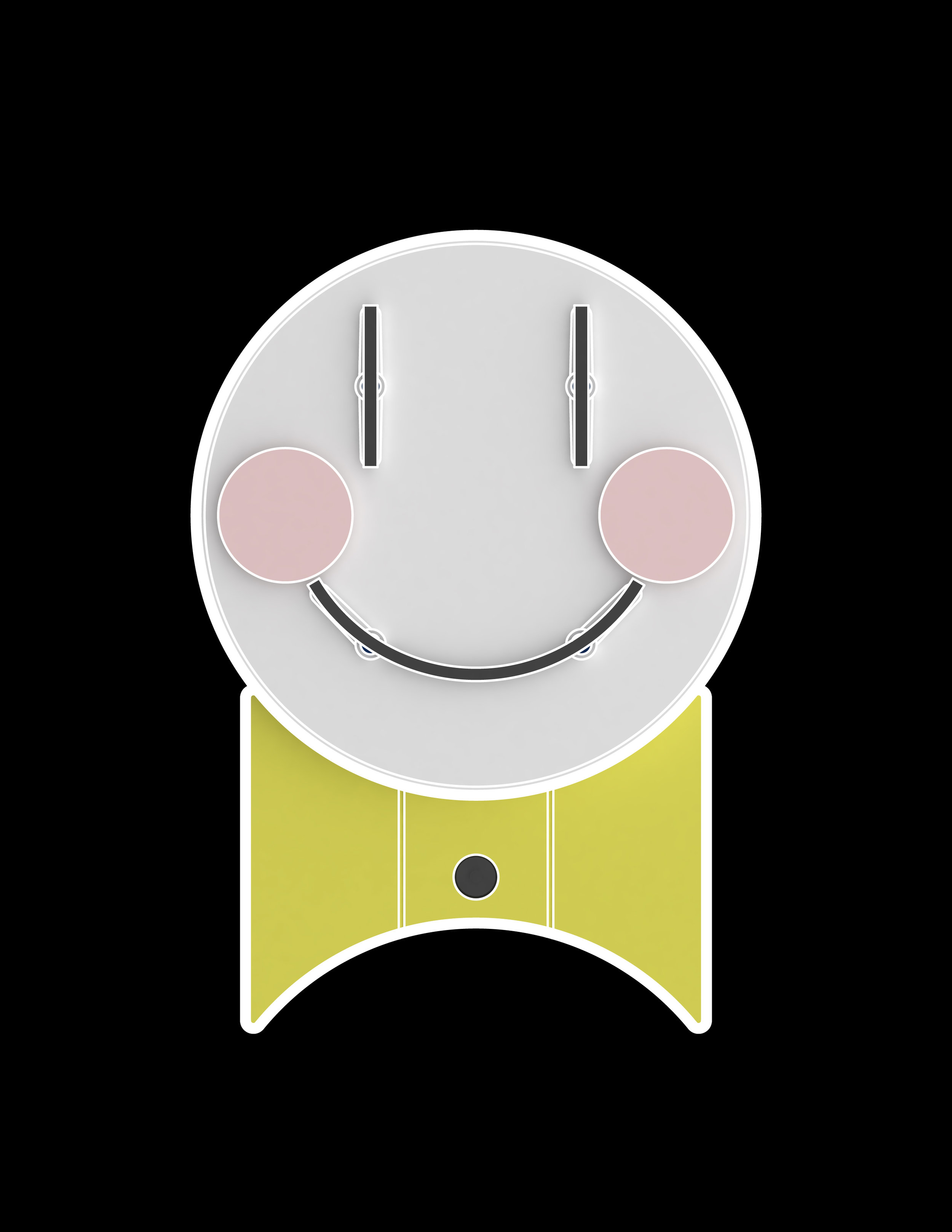

The prototype of our system consists of 3D-printed components in the form of a face and a bowtie, four servo motors which we converted into feedback servos (by soldering a wire to the internal potentiometer), a microcontroller, a custom-made driver board, an HD webcam, and two RGB LEDs with custom-made diffusers. The 3D-printed parts serve a dual purpose: they give the Emobject its physical form and they house all of the electronic and electromechanical components. The servo motors are mounted symmetrically relative to the center of the face: two for the mouth, and one for each eye. An RGB LED and diffuser are mounted on each cheek to enable additional facial expressions such as blushing (red) or rage (green). The camera is mounted inside the bowtie.

The feedback servos and the HD webcam provide two mechanisms through which the Emobject can serve as an input device. The first is to rotate the feedback servos by hand and then read the analog data from each servomotor to determine the resulting facial expression. The second is to capture the visual feed from the webcam and then run facial processing algorithms to extract the facial expression or emotion of a person. These two input streams can then be used in a variety of ways, including feedback, storage or remote transmission, and control of external devices.
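The first input path can be sketched as follows. This is a minimal illustration rather than the project's firmware: it assumes 10-bit analog readings (0 to 1023) that map linearly onto 0 to 180 degrees, and the reference poses and the names `REFERENCE_POSES`, `adc_to_angle`, and `classify_expression` are hypothetical.

```python
# Hypothetical reference poses, in degrees, ordered as
# (mouth_left, mouth_right, eye_left, eye_right). Real values would
# have to be calibrated on the physical device.
REFERENCE_POSES = {
    "happy": (150, 150, 90, 90),
    "sad": (30, 30, 90, 90),
    "neutral": (90, 90, 90, 90),
    "surprised": (90, 90, 150, 150),
}


def adc_to_angle(raw, raw_min=0, raw_max=1023, angle_max=180.0):
    """Convert a raw potentiometer reading to an angle (assumed linear)."""
    raw = min(max(raw, raw_min), raw_max)  # clamp out-of-range readings
    return (raw - raw_min) / (raw_max - raw_min) * angle_max


def classify_expression(raw_readings):
    """Return the reference pose closest to the four servo readings."""
    angles = [adc_to_angle(r) for r in raw_readings]

    def squared_distance(pose):
        return sum((a - p) ** 2 for a, p in zip(angles, pose))

    return min(REFERENCE_POSES, key=lambda name: squared_distance(REFERENCE_POSES[name]))
```

A nearest-pose lookup is only one plausible choice; it tolerates small errors when the servos are positioned by hand, but a calibrated device might use per-servo thresholds instead.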

We developed a number of software tools that support the aforementioned use cases for Emobject as an input device. We wrote a controller program in Processing that receives the analog servo data over a serial port and then makes HTTP requests to control a device on the Internet. We also developed software for processing the webcam data stream. By integrating Unity and Affectiva, we were able to retrieve emotion data from facial expressions. Moreover, by also incorporating OpenCV, we were able to perform facial tracking and emotion recognition for multiple faces.
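The serial-to-HTTP step of the controller might look roughly like the sketch below. It is written in Python for illustration, whereas the actual controller was written in Processing. The comma-separated line format, the query parameter names, and the helper names `parse_servo_line` and `build_request_url` are all assumptions, and no serial port or network connection is opened here.

```python
from urllib.parse import urlencode


def parse_servo_line(line):
    """Parse one serial line such as '512,498,300,310' into four integers.

    The comma-separated format is an assumption, not the documented protocol.
    """
    fields = line.strip().split(",")
    if len(fields) != 4:
        raise ValueError(f"expected 4 servo readings, got {len(fields)}")
    return [int(field) for field in fields]


def build_request_url(base_url, readings):
    """Build the URL of a GET request carrying the four servo readings."""
    # Hypothetical parameter names, one per servo.
    names = ("mouth_left", "mouth_right", "eye_left", "eye_right")
    query = urlencode(dict(zip(names, readings)))
    return f"{base_url}?{query}"
```

In a running controller, each line would come from the serial port and the resulting URL would be handed to an HTTP client; both ends are left out so that the sketch stays self-contained.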

Applications

Social Mood Monitor and Display - Emobject analyzes the emotions of multiple faces simultaneously and then displays the average emotion. As a social mood monitor and display, Emobject could signal to a newcomer whether joining the conversation would be appropriate.
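One way to compute such an average is sketched below. It assumes that the recognition step yields, for each detected face, a dictionary of emotion scores on a common scale; the emotion labels and the function name `average_emotion` are hypothetical and not taken from the project's code.

```python
def average_emotion(faces):
    """Average per-emotion scores over all faces and pick the strongest.

    `faces` is a list of dicts mapping an emotion label to a score.
    An emotion missing from a face counts as zero for that face.
    Returns (dominant_emotion, mean_scores), or (None, {}) for no faces.
    """
    if not faces:
        return None, {}
    totals = {}
    for scores in faces:
        for emotion, value in scores.items():
            totals[emotion] = totals.get(emotion, 0.0) + value
    means = {emotion: total / len(faces) for emotion, total in totals.items()}
    dominant = max(means, key=means.get)
    return dominant, means
```

Averaging the scores before choosing the dominant emotion means that one strongly expressive face can outweigh several mildly expressive ones; taking a majority vote over per-face labels would be an alternative design.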

Instant Emotional Feedback Mirror - Emobject can provide users with immediate, tangible feedback about their facial expression. When made aware of their momentary facial expressions, users may choose to smile more frequently, which has been shown to elicit happiness.

Notification Interface - Users can use a number of Emobjects for notification and control applications. A finished laundry cycle, lights left on unnecessarily, or a thermostat set too high or too low are all examples of informational alerts that could be delivered through facial expressions on different Emobjects. Since we are so much better and faster at perceiving faces, we might be much more likely to notice these alerts and act upon them when they are delivered in the form of facial expressions.

Control Interface - Emobject could be used as a home automation device. A user may associate a particular emotion with a set of lighting, temperature, and music settings, for instance, and another emotion with a different set. If all of these devices are automated, the user could simply change the emotion on the Emobject to apply the settings corresponding to that emotion. We have used Emobject to control the lights in the Media Lab 3rd floor atrium.
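The association between emotions and settings can be pictured as a simple lookup table. The sketch below is an assumption-laden illustration: the emotion labels, setting names, values, and the helpers `settings_for` and `changed_settings` are all hypothetical.

```python
# Hypothetical presets: each emotion maps to a bundle of device settings.
PRESETS = {
    "happy": {"light_brightness": 100, "temperature_c": 22, "playlist": "upbeat"},
    "calm": {"light_brightness": 40, "temperature_c": 22, "playlist": "ambient"},
}

# Fallback used when an emotion has no preset (also hypothetical).
DEFAULT_PRESET = {"light_brightness": 70, "temperature_c": 21, "playlist": "none"}


def settings_for(emotion):
    """Return a copy of the settings associated with an emotion."""
    return dict(PRESETS.get(emotion, DEFAULT_PRESET))


def changed_settings(previous, current):
    """Return only the settings that differ between two emotions."""
    old = settings_for(previous)
    return {key: value for key, value in settings_for(current).items() if old.get(key) != value}
```

Sending only the changed settings keeps the number of commands small when two emotions share most of their parameters.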

Emotion Recording and Playback Device - What was the range of facial expressions and emotions you went through today? What if you could play back your facial expressions in a time-lapsed fashion; would you be surprised at what you see? Would it provoke you to take a certain action or make a behavioral change?

Average Personal Expression Reflector - Emobject can be attached to a bathroom mirror to record your emotional state every time you face the mirror, and then display your average emotional expression over the past week along with the variation in emotions over time. When presented with this information, users may be motivated to smile more often.
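A weekly summary of this kind could be computed as in the sketch below. It assumes each mirror visit is logged as a timestamp with a single numeric score (for example, a smile intensity); that representation and the name `weekly_summary` are assumptions made for illustration.

```python
from datetime import datetime, timedelta
from statistics import mean, pstdev


def weekly_summary(samples, now):
    """Summarize the scores recorded during the seven days before `now`.

    `samples` is an iterable of (timestamp, score) pairs.
    Returns a dict with the mean, the spread (population standard
    deviation), and the sample count, or None if nothing was recorded.
    """
    cutoff = now - timedelta(days=7)
    recent = [score for timestamp, score in samples if cutoff <= timestamp <= now]
    if not recent:
        return None
    return {"mean": mean(recent), "spread": pstdev(recent), "count": len(recent)}
```

The mean could drive the displayed expression, while the spread gives a rough indication of how much the expression varied over the week.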

Conclusions

We believe that our system offers new forms of social affordances through emotion sensing and feedback control. It expands human sensing by rendering otherwise hard-to-perceive information as facial expressions, which are among the stimuli we perceive most readily. As illustrated in the applications section, Emobject can be applied across a variety of domains to connect personal feelings with the community and with external devices. We envision a world in which objects can convey information through emotions to express themselves and embody their environment.

Advisor: Hiroshi Ishii

Collaborators: Qi Xiong, Ali Shtarbanov, Difei Chen, Siya Takalkar